Autonomy is not a binary capability. It exists in levels.

This concept is something many industries have learned the hard way. In networking, aviation, robotics, and even automotive systems, autonomy has always been introduced gradually—levels of independence matched to levels of trust and responsibility. AI is no different. If anything, the need for clear autonomy boundaries is even stronger in agentic systems because the consequences of a bad decision can multiply quickly.

So let’s talk about what autonomy levels really mean, why they matter, and how they shape the future of agentic AI.

1. Why Autonomy Needs Levels

When people imagine “autonomous AI,” they often picture a single jump: from model to agent. But real autonomy isn’t built in one step. No mature system is deployed with full freedom from day one. Autonomy grows with experience, data, feedback, and safety signals.

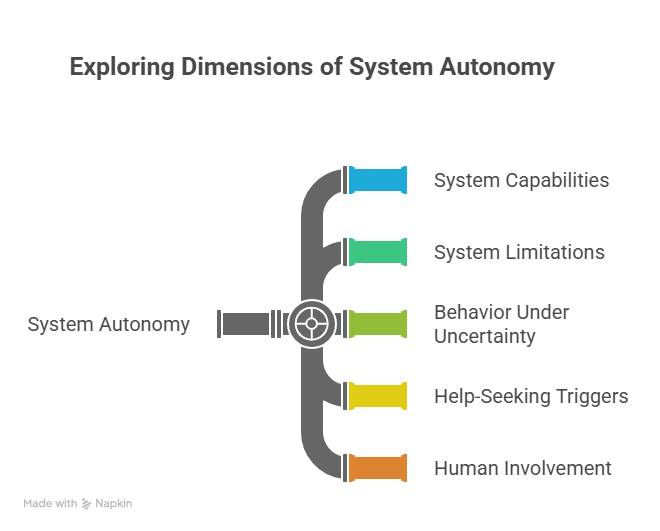

Autonomy levels help us define:

- What can the system do?

- What should the system not do?

- How should it behave under uncertainty?

- When must it ask for help?

- When must humans stay in the loop?

In practice, autonomy levels give us a shared language for capability, risk, and trust. They help teams agree on what “autonomous” means in a real system—not as a marketing term, but as an engineering discipline.

2. Thinking in Levels, Not Absolutes

-

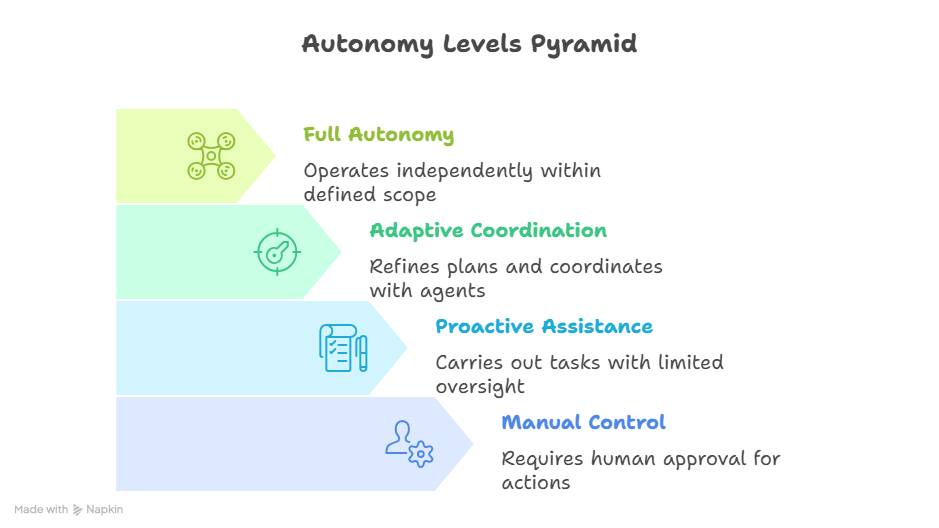

Level 0 – No Autonomy: The system observes and recommends, but all decisions and actions come from humans.

-

Level 1 – Assisted Autonomy: The system can perform narrow tasks but depends on humans for context and approvals.

-

Level 2 – Partial Autonomy: It can execute multi-step tasks, adapt to predictable changes, and recover from small errors but still requires frequent oversight.

-

Level 3 – Conditional Autonomy: The system acts independently in well-understood environments and escalates only when uncertainty or risk grows.

-

Level 4 – High Autonomy: The system handles complex workflows, revises plans, coordinates with other agents, and escalates infrequently.

-

Level 5 – Full Autonomy (within boundaries): The system manages goals, context, risk, and uncertainty on its own. “Full” autonomy still operates within clearly defined constraints.

At the lower levels, the AI acts like an assistant: it analyzes, suggests, and supports but never acts alone.

In the middle levels, the AI can take action, but always with oversight or in well-bounded contexts.

At the higher levels, the AI becomes capable of completing complex workflows with minimal or no supervision, as long as clear rules and guardrails exist.

This layered approach is not about limiting intelligence.

It’s about directing it safely.
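As a rough illustration, the six levels can be written as an ordered enumeration with a small function that maps each level to the kind of oversight it implies. This is only a sketch: the names `AutonomyLevel` and `oversight_mode`, the returned labels, and the grouping of Levels 0-1, 2-3, and 4-5 into lower, middle, and higher bands are assumptions made for the example, not part of any standard.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """The six levels listed above, ordered so they can be compared."""
    NO_AUTONOMY = 0
    ASSISTED = 1
    PARTIAL = 2
    CONDITIONAL = 3
    HIGH = 4
    FULL_WITHIN_BOUNDARIES = 5


def oversight_mode(level: AutonomyLevel, action_in_scope: bool) -> str:
    """Map a level to the kind of human oversight an action needs.

    The band cutoffs and the returned labels are hypothetical.
    """
    # Even "full" autonomy stops at its defined boundaries.
    if not action_in_scope:
        return "escalate_to_human"
    # Assumed lower band: the system suggests, a human approves every action.
    if level <= AutonomyLevel.ASSISTED:
        return "human_approves_each_action"
    # Assumed middle band: the system acts, with oversight or in bounded contexts.
    if level <= AutonomyLevel.CONDITIONAL:
        return "act_with_oversight"
    # Assumed higher band: minimal supervision, guardrails still apply.
    return "act_within_guardrails"


for level in AutonomyLevel:
    print(level.value, level.name, oversight_mode(level, action_in_scope=True))
```

Using an ordered enumeration keeps comparisons such as `level <= AutonomyLevel.CONDITIONAL` readable, and the out-of-scope check comes first so that no level can bypass its boundaries.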

At the lowest level, the system has no independence at all. It observes, analyzes, and suggests, but every meaningful action still requires human approval. This is similar to how many current LLM assistants work today: they support the operator, but they don’t take initiative.

As autonomy increases, the system becomes more proactive. It can carry out well-defined tasks, fill in missing details, and recover from simple mistakes. It still asks for help when conditions change, but it has enough structure to handle routine workflows with limited oversight.

Higher autonomy levels introduce adaptation. The agent can sense when its plan is no longer valid, refine it, coordinate with other agents, and escalate only when necessary. These systems behave more like teammates—they understand the goal, not just the task list.

At full autonomy, the system operates independently within a well-defined scope. It understands objectives, constraints, risks, and its own limitations. Crucially, full autonomy does not mean “unrestricted freedom.” It simply means the system is capable of managing uncertainty and making safe choices without continuous supervision.

In practice, these levels are not strict boundaries but stages of maturity. They give teams a shared vocabulary for capability and responsibility. They also make it clearer how and when an agent should move from one stage to another—by gaining better judgment, better context understanding, and stronger decision quality.

This layered approach to autonomy is what makes complex systems predictable. It gives them the structure they need to behave responsibly, especially in environments where a single bad step can trigger cascading failures. And as agentic AI matures, these autonomy levels will become the backbone of how we design, deploy, and trust autonomous systems.

3. How Autonomy Actually Works in Real Agentic Systems

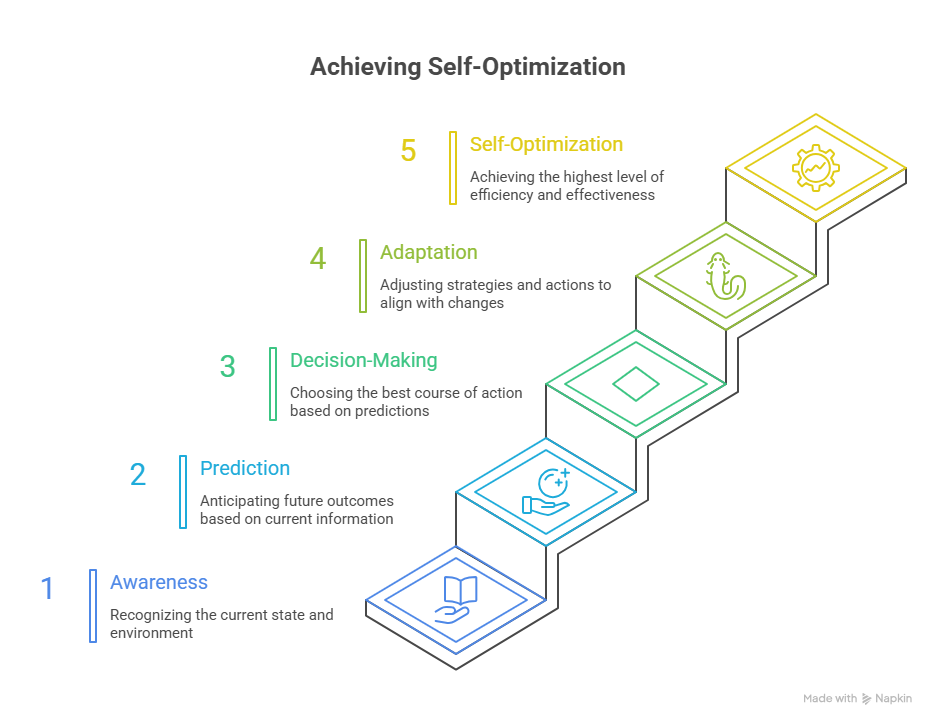

In agentic AI, autonomy is expressed through behavior, i.e., how the system interprets goals, executes plans, and deals with uncertainty.

When an AI receives a task, it must answer the following questions, which form the backbone of autonomy levels:

- Do I have enough information to begin?

- Is my plan safe and reasonable?

- What should I do if I encounter something unexpected?

- When is the situation too risky for me to continue?

- Should I escalate to a human or another agent?

A lower-level autonomous agent might answer many of these questions conservatively: it asks the user for more details, seeks approval for each step, and stops when things become unclear.

A higher-level autonomous agent handles more on its own: it fills gaps, revises plans, maintains context, and escalates only when required.

And at the highest levels, the agent behaves almost like a skilled teammate—understanding objectives, recognizing constraints, managing uncertainty, and adapting plans dynamically.

But none of this can happen safely unless the system understands its own boundaries. That’s where autonomy levels matter most.
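The questions in this section can be sketched as a single decision function whose answers depend on the agent's level. Everything here is illustrative: the `Situation` fields, the `Decision` labels, the 0.0 to 1.0 risk scale, the `risk_limit` default, and the cutoff that treats Levels 0-2 as "lower" are assumptions chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ASK_FOR_DETAILS = "ask_for_details"
    REQUEST_APPROVAL = "request_approval"
    PROCEED = "proceed"
    REVISE_PLAN = "revise_plan"
    ESCALATE = "escalate"


@dataclass
class Situation:
    has_enough_information: bool
    plan_is_safe: bool
    hit_unexpected_event: bool
    risk: float  # hypothetical risk estimate between 0.0 and 1.0


def decide(situation: Situation, level: int, risk_limit: float = 0.7) -> Decision:
    """Answer the five questions for an agent at the given level (0-5)."""
    lower_level = level <= 2  # assumed cutoff between cautious and independent

    # Too risky to continue: every level escalates.
    if situation.risk > risk_limit:
        return Decision.ESCALATE
    # Unsafe plan: a lower-level agent stops, a higher-level one revises.
    if not situation.plan_is_safe:
        return Decision.ESCALATE if lower_level else Decision.REVISE_PLAN
    # Missing information: a lower-level agent asks, a higher-level one fills gaps.
    if not situation.has_enough_information and lower_level:
        return Decision.ASK_FOR_DETAILS
    # Something unexpected: stop when unclear, or adapt the plan.
    if situation.hit_unexpected_event:
        return Decision.ESCALATE if lower_level else Decision.REVISE_PLAN
    # Routine case: seek approval per step, or act independently.
    return Decision.REQUEST_APPROVAL if lower_level else Decision.PROCEED


routine = Situation(True, True, False, risk=0.2)
print(decide(routine, level=1))  # Decision.REQUEST_APPROVAL
print(decide(routine, level=4))  # Decision.PROCEED
```

The risk check runs first on purpose, so that no autonomy level can continue past the boundary it is meant to respect.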

4. Lessons From Telecom and AI-Native Networks

Networked systems have been grappling with autonomy for years. In 5G, and in the 6G AI-native networks that follow it, autonomy is not optional; it is a necessity. Networks must respond to failures, optimize performance, predict traffic, adjust mobility parameters, and coordinate resources without waiting for humans. Concepts such as autonomy, maturity, fallback behavior, and cross-validation also appear in the next generation of AI-native networks [Link].

But telecom systems never jump to full autonomy immediately. They evolve through maturity levels that mirror autonomy levels in AI.

Each stage builds on the previous one. Each increase in autonomy is backed by stronger validation, clearer guardrails, and more robust decision loops. This structured approach is the opposite of “let the agent figure it out.”

It’s deliberate, incremental, and safe. The same principles apply to agentic AI systems. If AI is expected to operate in real environments—workflows, data pipelines, operations, customer interactions—then autonomy levels become a practical tool, not a theoretical idea.

5. Autonomy Levels and Uncertainty

Higher autonomy doesn’t mean fewer questions—it means better questions.

More introspection.

Better judgment.

More awareness of risk.

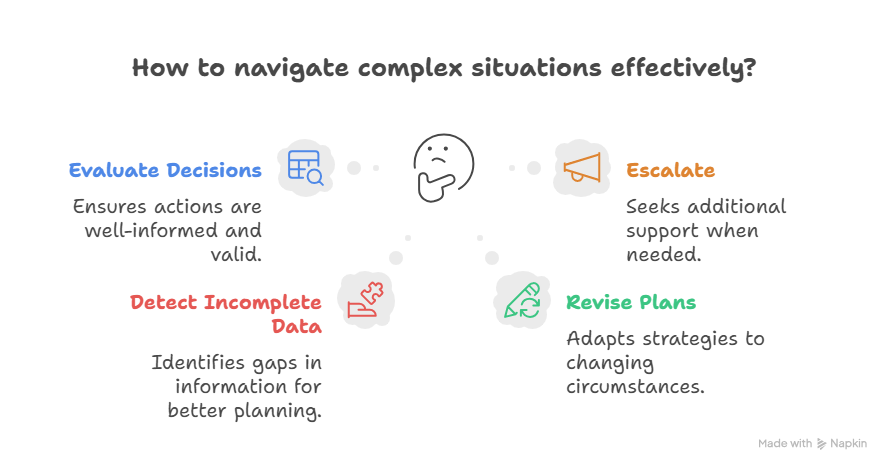

As autonomy increases, the system must be able to:

- evaluate the strength of its own decisions

- detect when data is incomplete

- recognize when plans need revision

- know when to escalate

Quality of Decision (QoD) becomes the mechanism that allows autonomy levels to function safely. In turn, autonomy levels become the structure that makes QoD meaningful.
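One way to picture how QoD could gate autonomous action is shown below. The article does not define how QoD is computed, so this sketch makes loud assumptions: three hypothetical signals scored between 0.0 and 1.0, QoD taken as the weakest of them, and a single `min_qod` threshold. A real framework may combine signals very differently.

```python
from dataclasses import dataclass


@dataclass
class DecisionSignals:
    """Hypothetical self-assessment scores, each between 0.0 and 1.0."""
    confidence: float          # strength of the agent's own decision
    data_completeness: float   # how complete the input data appears
    plan_validity: float       # whether the current plan still holds


def quality_of_decision(signals: DecisionSignals) -> float:
    """Assumed QoD: a decision is only as good as its weakest signal."""
    return min(signals.confidence, signals.data_completeness, signals.plan_validity)


def next_step(signals: DecisionSignals, min_qod: float = 0.8) -> str:
    """Choose what the agent does next, given an assumed QoD threshold."""
    # Detect when data is incomplete.
    if signals.data_completeness < min_qod:
        return "request_more_data"
    # Recognize when the plan needs revision.
    if signals.plan_validity < min_qod:
        return "revise_plan"
    # Know when to escalate: the decision itself is too weak.
    if signals.confidence < min_qod:
        return "escalate"
    # All signals clear the threshold, so QoD does too.
    return "act"


signals = DecisionSignals(confidence=0.9, data_completeness=0.85, plan_validity=0.95)
print(quality_of_decision(signals), next_step(signals))
```

A higher autonomy level could be modelled by passing a lower `min_qod`, though which thresholds are appropriate for which level is a design decision this sketch does not settle.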

6. Designing With Autonomy Levels in Mind

7. The Future of Agentic Systems is Layered Autonomy

- richer self-awareness

- clearer decision boundaries

- stronger validation loops

- predictable escalation behavior

- coordinated multi-agent reasoning

We are not building systems that simply execute tasks, but systems that know when to act, when to pause, and when to ask for help. That is what real autonomy means, and it is where the next generation of AI systems will differentiate themselves: not through raw intelligence, but through responsible judgment.

If you’re building agentic systems and want to talk about QoD frameworks, feel free to connect on LinkedIn or send an email.