How to design AI that knows when it’s right, when it’s wrong, and when it should ask for help

1. The Hidden Weakness in Today’s Agentic AI

Yet most agentic AI systems still fail in the same predictable way. The cause usually isn't a weak LLM, a poorly designed architecture, or missing tools. They fail because they cannot judge their own decisions. They don't know when the input data is incomplete, when the reasoning is shaky, or when an action is too risky, and in most cases they never ask for help. This is exactly the gap that Quality of Decision (QoD) fills.

If LLMs give us intelligence, QoD gives us judgment, and judgment is what makes autonomy safe and scalable.

2. What QoD Really Means

Think of it like this: a system without QoD might confidently recommend a product based on limited information, while a QoD-aware system would recognize the risk of a mistake and trigger a check for more data.
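The product example can be sketched in a few lines of Python. This is a minimal illustration, not the author's method: the required fields, the 0.7 threshold, and the rule of capping QoD at the data-completeness ratio are all assumptions, chosen to show how high model confidence and low decision quality can coexist.

```python
from dataclasses import dataclass, field

# Hypothetical inputs a product recommender needs before its decision is well-founded.
REQUIRED_FIELDS = ("budget", "use_case", "purchase_history")


@dataclass
class Recommendation:
    product: str
    model_confidence: float  # how sure the model "feels", in [0, 1]
    context: dict = field(default_factory=dict)  # user data behind the decision


def data_completeness(rec: Recommendation) -> float:
    """Fraction of required fields that are present (not None) in the context."""
    present = sum(1 for f in REQUIRED_FIELDS if rec.context.get(f) is not None)
    return present / len(REQUIRED_FIELDS)


def qod_score(rec: Recommendation) -> float:
    # Assumption: decision quality is capped by evidence quality, so a
    # confident guess built on thin data still gets a low score.
    return min(rec.model_confidence, data_completeness(rec))


def next_step(rec: Recommendation, threshold: float = 0.7) -> str:
    """Recommend only when QoD clears the (hypothetical) threshold."""
    if qod_score(rec) >= threshold:
        return f"recommend {rec.product}"
    missing = [f for f in REQUIRED_FIELDS if rec.context.get(f) is None]
    if not missing:
        # Data is complete but the model itself is unsure: re-check instead.
        return "re-check recommendation"
    return "request more data: " + ", ".join(missing)


rec = Recommendation("laptop-x", model_confidence=0.95, context={"budget": 1200})
print(next_step(rec))  # request more data: use_case, purchase_history
```

Here the model is 95% confident, but only one of three required fields is known, so the sketch asks for more data instead of recommending.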

Confidence is about how sure the model feels. QoD is about how well-founded the decision actually is. QoD gives the system a form of self-awareness: it helps an agent recognize when to move forward, when to step back, when to double-check with another agent, and when to ask a human. In other words, QoD lets an autonomous system behave responsibly.
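One way to picture those four behaviors is a routing function from a QoD score to an action. The sketch below assumes QoD has already been computed as a number between 0 and 1 and that each action carries a simple high-risk flag; the `Action` names and all thresholds are hypothetical placeholders that a real system would need to calibrate.

```python
from enum import Enum


class Action(Enum):
    PROCEED = "proceed"
    STEP_BACK = "step back and gather more context"
    PEER_CHECK = "double-check with another agent"
    ASK_HUMAN = "ask a human"


def route(qod: float, high_risk: bool) -> Action:
    """Map a QoD score in [0, 1] and a risk flag to one of four behaviors.

    Thresholds are illustrative assumptions, not calibrated values.
    """
    if not 0.0 <= qod <= 1.0:
        raise ValueError("qod must be in [0, 1]")
    if high_risk and qod < 0.9:
        # Risky actions need a near-certain decision; otherwise a human decides.
        return Action.ASK_HUMAN
    if qod >= 0.8:
        return Action.PROCEED
    if qod >= 0.6:
        return Action.PEER_CHECK
    if qod >= 0.3:
        return Action.STEP_BACK
    return Action.ASK_HUMAN


for qod, risky in [(0.92, False), (0.7, False), (0.45, False), (0.85, True)]:
    print(qod, risky, "->", route(qod, risky).value)
```

Risk is checked first on purpose: a high-risk action needs a higher QoD to proceed than a routine one.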

3. Where QoD Fits in the Agentic Architecture

- Recognizing poor reasoning

- Identifying missing context

- Detecting contradictions

- Avoiding risky actions

- Requesting help before making mistakes
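The five capabilities listed above can be read as independent checks that run before an agent acts. The following sketch is one possible encoding under stated assumptions, not a prescribed design: reasoning quality is approximated by agreement across several sampled answers (a self-consistency style check), contradictions are modelled as sources reporting different values for the same fact, and the `DecisionInput` structure, the risk scale, and the 60% agreement threshold are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class DecisionInput:
    reasoning_samples: list[str]    # answers from several independent reasoning passes
    context: dict                   # facts the agent is working from
    required_keys: tuple[str, ...]  # facts the task cannot do without
    claims: dict[str, set]          # fact -> distinct values reported by different sources
    action_risk: str                # "low", "medium" or "high" (hypothetical scale)


def assess(d: DecisionInput, min_agreement: float = 0.6) -> list[str]:
    """Return the QoD issues found; an empty list means no check fired."""
    issues = []

    # Poor reasoning: independent passes disagree (a self-consistency style check).
    agreement = 0.0
    if d.reasoning_samples:
        top_count = Counter(d.reasoning_samples).most_common(1)[0][1]
        agreement = top_count / len(d.reasoning_samples)
    if agreement < min_agreement:
        issues.append(f"poor reasoning: only {agreement:.0%} of passes agree")

    # Missing context: required facts absent from the working context.
    missing = [k for k in d.required_keys if k not in d.context]
    if missing:
        issues.append("missing context: " + ", ".join(missing))

    # Contradictions: sources disagree about the same fact.
    conflicts = sorted(k for k, values in d.claims.items() if len(values) > 1)
    if conflicts:
        issues.append("contradiction: " + ", ".join(conflicts))

    # Risky actions: anything rated high-risk is flagged regardless of the rest.
    if d.action_risk == "high":
        issues.append("risky action")

    return issues


def should_request_help(d: DecisionInput) -> bool:
    # Ask for help (peer agent or human) before acting if any check fired.
    return bool(assess(d))


d = DecisionInput(
    reasoning_samples=["approve", "approve", "reject"],
    context={"customer_id": 42},
    required_keys=("customer_id", "credit_score"),
    claims={"address": {"Main St", "Oak Ave"}},
    action_risk="high",
)
print(assess(d))
# ['missing context: credit_score', 'contradiction: address', 'risky action']
print(should_request_help(d))  # True
```

Returning a list of named issues, rather than a single pass/fail flag, lets whoever is asked for help see why the agent stopped.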

4. Why QoD Matters in Real Systems: Avoiding Costly Mistakes

5. Telecom and AI-Native Networks: A Lesson in Judgment

6. How QoD Works Behind the Scenes: A Simple, Effective Approach

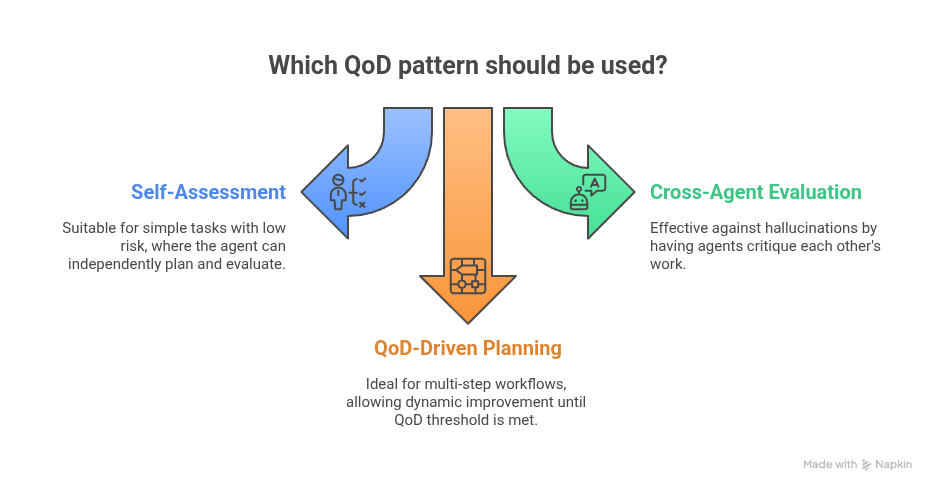

7. Patterns That Work in Practice: Building Reliable Systems

8. From a Leadership Perspective

9. Bottom Line: QoD is the Bedrock of Real Autonomy

If you’re building agentic systems and want to talk about QoD frameworks, feel free to connect on LinkedIn or send an email.